In the field of Machine Learning, an increasingly popular topic is Model Monitoring, i.e. the continuous tracking of a model’s performance in production, which is an important component of any complete MLOps (or Machine Learning Operations) solution.

Model Monitoring includes the identification and tracking of a variety of possible issues, such as the quality of input data, the predicting performance of the model, and the “health” metrics of the system (including usage of computational resources, network I/O, etc.). Recently, one of the most discussed issues arising in ML systems in production is Model Degradation, i.e. the worsening of its prediction performance over time, which has been extensively researched in studies like [Vela et al.], where authors observed temporal model degradation in 91% of the ML models tested.

This study, and many others (see [Bayram et al.]), point out Data Drift as one of the principal causes for model degradation; another cause may be the size of training data (which could not sufficiently capture the complexity of the problem space), or the choice of model hyperparameters.

What is Data Drift

Data drift is a change in the joint probability distribution of input and target variables of a dataset between two time instants. To further investigate this change, the joint probability distribution can be decomposed into different parts: input data probability distribution, prior probability distribution of target labels, class conditional probability density distribution, and posterior probability distribution of target labels. Data drift can be attributed to a change in one or more of these components, and according to which components are involved we can categorize drift into different types. However, as explained in the next paragraphs, this is not the only principle used to distinguish types of drift. This change can cause model degradation, and thus become a problem in ML systems, when it occurs between the training data and the data used for test or inference.

Causes

Data drift arises from a multitude of factors, such as: degradation in the quality of materials of a system’s equipment, changes due to seasonality, changes in personal behaviors or preferences, and adversarial activities. These causes for Data Drift are inherent elements of diverse real-world scenarios, and given the difficulty to predict or even detect them, in recent years drift detection and adaptation have been addressed in a vast range of domains and applications.

We can state that every ML system operating in a non-stationary environment has to address data drift, with detection and/or with passive or active adaptation of the model.

Types of Drift

As mentioned earlier, we can identify different types of drift, based on which components of the joint probability distribution of data change. This criteria, usually called Source of Change, is one of the two main principles used to differentiate drift, the other is the Drift Pattern, which it’ll be explained later in the paragraph.

Numerous terms and mathematical definitions can be found in the literature to describe the same data drift type based on the source of change, making it challenging for researchers to find a unique definition of a given drift type. However, also thanks to the work done in [Bayram et al.], it is possible to distinguish 3 main types of drift:

- Real Concept Drift: it refers to a change in the posterior probability distribution of target labels. It indicates a change in the underlying target concept of data, so it requires an adaptation of the model’s decision boundary to preserve its accuracy. This type of drift can be accompanied by changes in the input data probability distribution. However, if this is not the case, it can also be called actual drift.

- Covariate Shift: it refers to a change in the input data probability distribution. In practice, this type of drift and real concept drift often happen simultaneously. If it is not the case, it can be called virtual drift. Covariate shift can also manifest itself only in a sub-region of the input space (local concept drift), and can also be implied by an arisal of new attributes (feature-evolution).

- Label Shift: it refers to a change in the prior probability distribution of target labels. This can badly affect the prediction performance of the model if the change in the distribution is significant. Label shift can be caused by the emergence of new classes in the distribution (concept-evolution), or by the disappearance of one or more classes (concept deletion).

Drift pattern, on the other hand, categorizes data drift into 4 types based on the pattern of the evolution of the drift (i.e. the transition of a distribution from a concept to another). These are:

- Sudden Drift: when the distribution changes abruptly at a point in time.

- Incremental Drift: when the new concept takes over in a continuous manner during a transition phase, without a clear separation with the old one.

- Gradual Drift: when the distribution changes progressively, alternating periods of the new concept with periods of the old one over a transition phase, and at last stabilizing into the new one.

- Recurring Drift: when another concept appears periodically, similarly to gradual drift, but with the distribution never stabilizing into a single concept.

Possible Solutions

Now that we know what data drift is and what its consequences on the performance of ML models in production are, the next question is: what are the possible solutions?

As briefly mentioned before, data drift is handled by employing detection and/or adaptation techniques. There are two possible strategies for a data drift handling framework: the passive approach (also called blind adaptation), which uses a learning model that is constantly updated with new data instances, and the active approach (also called informed adaptation), which uses a drift detection component and a drift adaptation component that is triggered by the former.

The passive approach is made possible by employing the so-called adaptive learning algorithms. These include incremental algorithms as well as online algorithms, which process data sequentially and thus produce a model that is continuously updated as new training data arrives. On the contrary, active approaches employ algorithms that don’t intrinsically adapt to evolving data distributions. Firstly the algorithms detect when a data drift is happening using one of the vast selection of drift detectors and then trigger the adaptation mechanism for the learning model. This process typically involves a re-training job using more recent data.

Drift Detectors

Drift detection refers to the methods that help to detect and identify a time instant (or interval) when a change arises in the target data distribution. These methods typically use a statistical test to quantify the similarity between old and new samples. To assess the change in the concept and the significance of the drift, the similarity value is compared to a predefined threshold. This hypothesis testing uses the following null hypothesis: “the test statistic will not yield a significant difference between the old and new data”, meaning that no concept drift is detected. If failing to reject the null hypothesis, the system will make no change to the current learner and continue with the data stream.

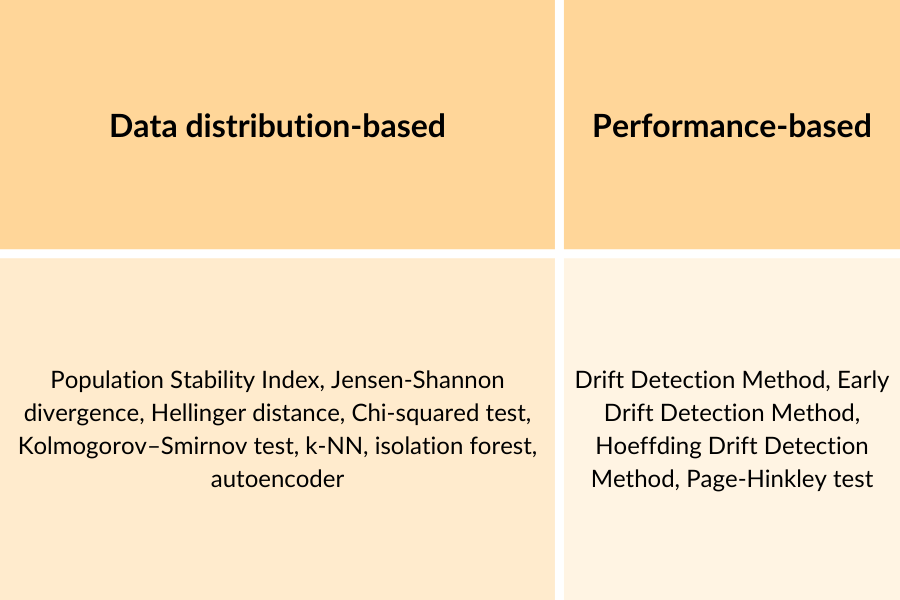

Studies on drift detection can be categorized depending on the test statistics they use to detect the change. The most popular approaches are:

- Data Distribution-Based Detection: it uses divergence measures between the data distributions in two different time windows to estimate their similarity. A drift is detected if the two distributions show significant divergence, using a threshold value. The main advantage of data distribution-based methods is that they can be applied to both supervised and unsupervised problems, as they only consider the distribution of data points. However, they can produce false positives because changes in the data distribution do not always degrade the model performance.

- Performance-based Detection: it is the most explored category and it forms the largest group of drift detectors. They detect variations in the model’s prediction error to infer changes in the data. These methods have the advantage of detecting a drift only when the performance of the model is affected. However, their main problem is that they require prompt feedback on the predictions, which is not always possible. Performance-based methods can be furthermore divided into three groups according to the strategy used to detect performance degradation: statistical process control (trace the “online” error evolution of the model), windowing techniques (use sliding windows to monitor the performance of the most recent observations, comparing it with that of a reference window) and ensemble learning (combine results from multiple different learners in an ensemble).

Below is a list of some drift detectors for both categories:

Machine Learning algorithms can also be used to detect data drift; some examples are: k-Nearest Neighbors, isolation forest and autoencoder. Typically used for anomaly detection, these methods can also be adapted for drift detection. They utilize a sliding window approach, where a sub-window trains the model, and a more recent sub-window is used to detect potential drift.

Drift detection is a fundamental component of model monitoring in production, part of the job of ML Engineers (or, more specifically, MLOps Engineers) when creating and managing an MLOps system. As already stated, model monitoring is crucial to ensure that our ML model (whether we’re fine-tuning an LLM or working with a traditional algorithm) stays operational in relevant real-world scenarios, without becoming obsolete. Many platforms offer MLOps functionalities, with each major cloud provider typically having its own solution, such as Amazon SageMaker, Google Cloud Vertex AI, and Microsoft Azure ML Platform. Additionally, the increasing demand for MLOps practices has led to the emergence of numerous other platforms, including Databricks, Modelbit, and Radicalbit, a Bitrock sister company.

During my internship at Bitrock, I worked extensively with the Radicalbit platform, conducting experiments with drift detectors and comparing batch vs. online ML models, which formed the basis of my master’s thesis.

Radicalbit is an MLOps and AI Observability platform that simplifies the management of the machine learning workflow, offering advanced deployment, monitoring, and explainability. The platform is also available as an open source solution that focuses on AI Monitoring featuring dashboards for Data Quality, Model Quality, and Drift Detection; it is available on GitHub.

LLM Drift

With the recent developments in the field of Large Language Models (or LLMs) an emergent topic of discussion is LLM drift. If we apply the formal definition of data drift to LLMs we can encounter some difficulties. Firstly because tracking their performance is not trivial, moreover they perform a long list of tasks (which are not really limited and pre-defined). However with the term LLM drift we’re not just referring to that. There are a few issues that have recently been studied in the literature- The first that comes to mind is probably the fact that these models are trained on huge batches of data and are not constantly updated, so if a model is used for many months (or even years) after its training, it can have significant limitations when producing results about topics that recently changed in the real-world or events that happened after its training. Projecting this scenario for many years, we could also include changes in natural language, such as new expressions or terms being increasingly more used or some terms being naturally deprecated by people.

Of course, we all know that popular LLMs are frequently updated with new information to avoid these types of issues. This has also been a topic of discussion and research, for example in [Chen et al.] which analyzes the changes in ChatGPT’s behavior (both 3.5 and 4) between March and June of 2023. They found that the performance and behaviors of both GPT-3.5 and GPT-4 can vary greatly over time. While evaluating models on different tasks (math problems, sensitive/dangerous questions, opinion surveys, multi-hop knowledge-intensive questions, generating code, etc.) they observed some improvements but also some significant degradations (up to 60% loss in performance), and generally many changes in their behavior in relatively small amounts of time. The authors also point out the importance of LLM Monitoring, especially for these services that, like ChatGPT, deprecate and update their underlying models in an opaque way. This also causes the so-called prompt drift, which is the phenomenon where a prompt produces different responses over time due to model changes, model migrations or changes in prompt-injection data at inference. In fact, recently there’s been the emergence of many prompt management and testing interfaces. The market clearly demands a way to test generative apps before LLM migration/deprecation and to develop an app to be somewhat agnostic to the underlying LLM.

Conclusions

Data drift poses significant challenges to machine learning systems in production. Understanding and addressing drift through effective monitoring practices (i.e. using drift detection methods and regular retraining with updated data) is crucial.

Drift detection is a key step in MLOps, a crucial field for taking machine learning models from development to production, and should be an integral part of any deployment plan, not an afterthought. It should be automated, with careful consideration given to the detection methodology, thresholds, and actions to take when drift is detected. Proactive drift monitoring and adaptation ensures your machine learning system remains valuable over time. Moreover, recent developments in LLMs have introduced the concept of LLM drift, which includes challenges in tracking performance due to their broad and evolving range of tasks, and issues with outdated training data affecting accuracy over time, highlighting the importance of LLM monitoring and prompt management.

Main Author: Nicola Manca, MLOps Engineer @ Bitrock